Muyao Yuan

I am Muyao Yuan (袁慕遥), a Ph.D. candidate in the MOEKLINNS Lab at Xi’an Jiaotong University, advised by Prof. Weizhan Zhang. My research interests include model compression, model adaptation, and multimodal large language models (MLLMs).

🔥 News

- 📅 [2025.11] 🎉 Our paper titled “InfoCLIP: Bridging Vision-Language Pretraining and Open-Vocabulary Semantic Segmentation via Information-Theoretic Alignment Transfer” has been accepted by AAAI 2026!

- 📅 [2025.05] 🎉 Our paper titled “InfoSAM: Fine-Tuning the Segment Anything Model from an Information-Theoretic Perspective” has been accepted as a Spotlight Poster at ICML 2025!

📝 Publications

- InfoCLIP: Bridging Vision-Language Pretraining and Open-Vocabulary Semantic Segmentation via Information-Theoretic Alignment Transfer. M. Yuan, Y. Zhang, W. Zhang, L. Ma, Y. Gao, J. Ying, Y. Xin. In: AAAI Conference on Artificial Intelligence (AAAI), 2026. [Project Page] [PDF]

- InfoSAM: Fine-Tuning the Segment Anything Model from an Information-Theoretic Perspective. Y. Zhang*, M. Yuan*, W. Zhang, T. Gong, W. Wen, J. Ying, W. Shi. In: International Conference on Machine Learning (ICML Spotlight), 2025. [Project Page] [PDF] [Code]

- Adaptive Token Selection for Efficient Detection Transformer with Dual Teacher Supervision. M. Yuan, W. Zhang, C. Yan, T. Gong, Y. Zhang, J. Ying. Knowledge-Based Systems (KBS), 2024. [PDF] [Code]

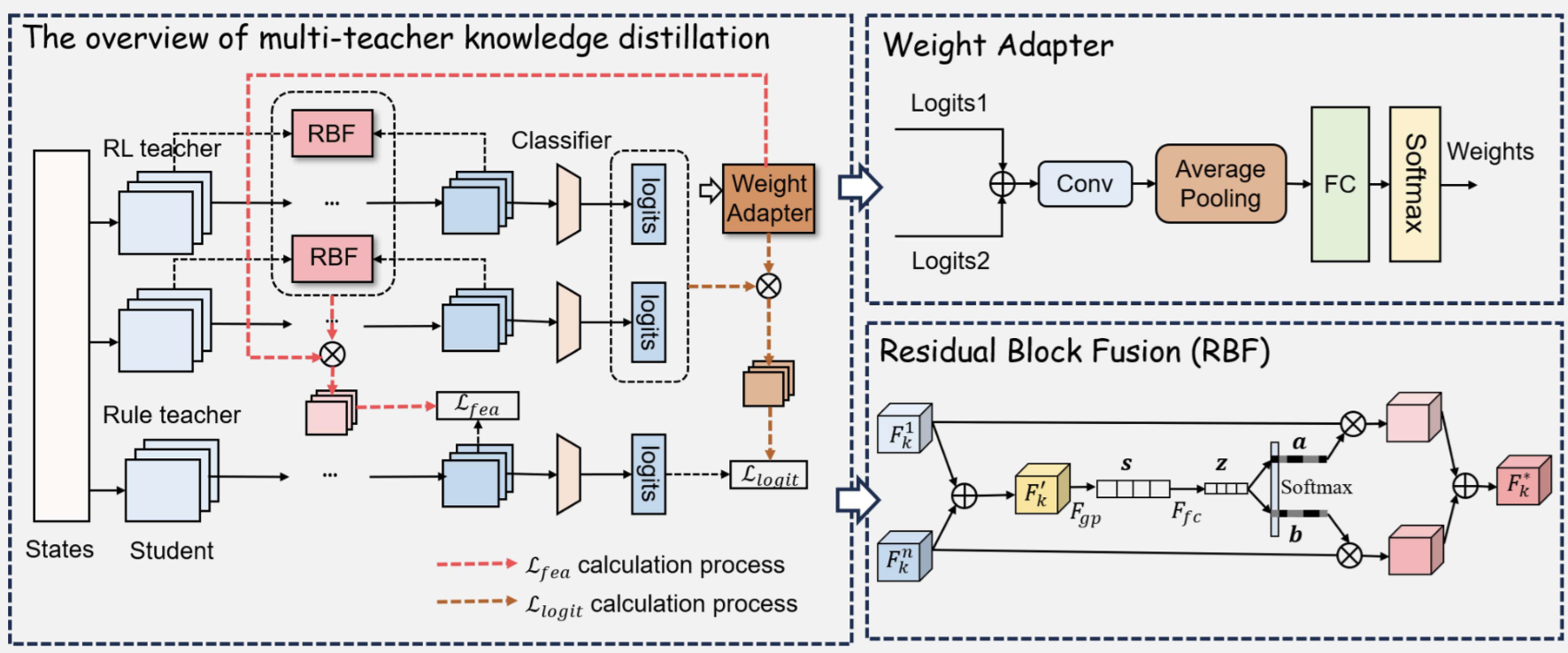

- Lightweight Configuration Adaptation with Multi-teacher Reinforcement Learning for Live Video Analytics. Y. Zhang, W. Zhang, M. Yuan, L. Xu, C. Yan, T. Gong, H. Du. IEEE Transactions on Mobile Computing (TMC), 2025.

🛠 Projects

- Efficient Training and Inference of Multimodal Foundation Models. [Project Page]

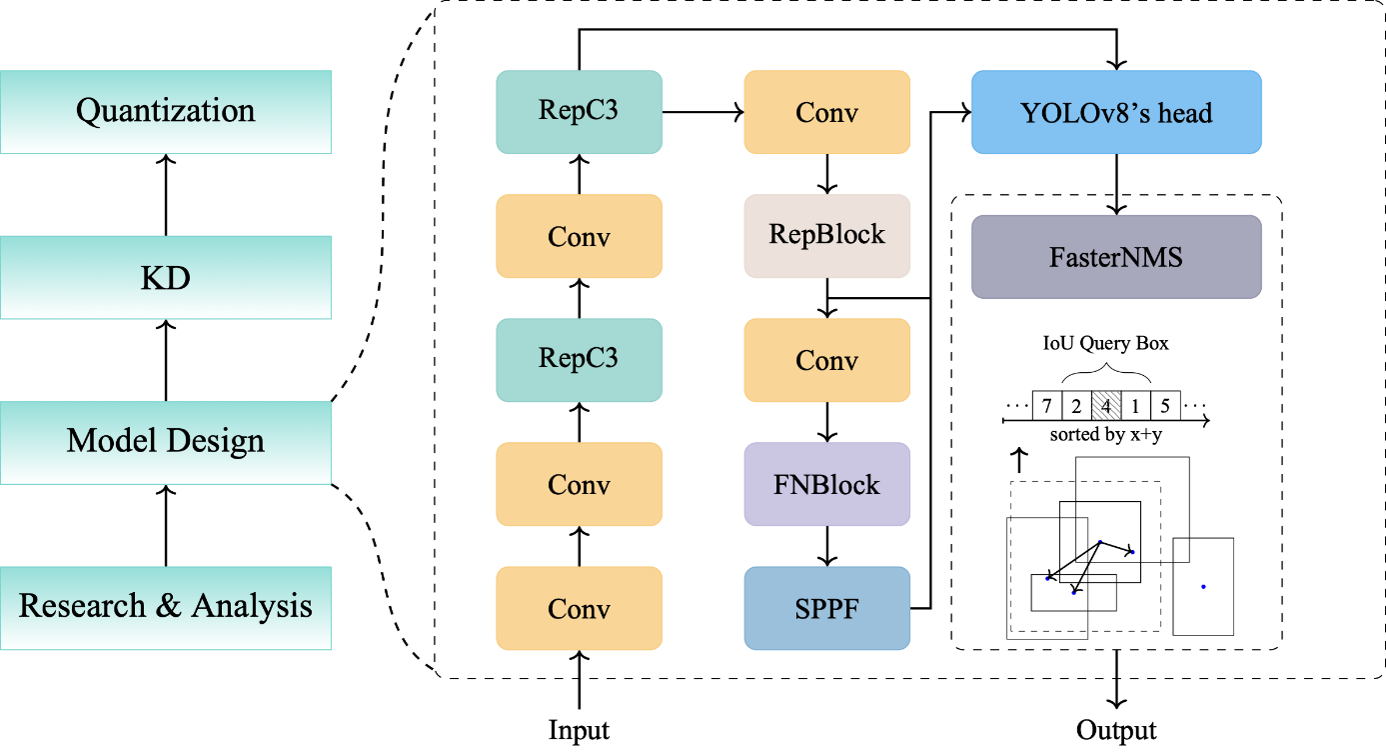

- Lightweight and Efficient Model Design for Diverse Tasks. [Project Page]

💡 Selected Patents

- Resource-Efficient Training for MLLMs via Adaptive Data Filtering

- Efficient Collaborative Inference for Autoregressive MLLMs

- Device and Edge Collaborative Personalized Inference for MLLMs with Heterogeneous Resources

- Efficient MLLM Training via Expanded Pipeline Stages

- Self-Driven Feedback and Symbolic Collaboration for MLLM Inference

- LoRA and MoE-Based Fine-Tuning for MLLMs

- Elastic Architecture Design and Pruning for MLLMs with Heterogeneous Experts

- Efficient MLLM Initialization via Weight Inheritance

🏆 Selected Awards

- Special Class Academic Scholarship, Xi’an Jiaotong University, 2025.

- Excellent Postgraduate, Xi’an Jiaotong University, 2025.

- Outstanding Graduate, Xi’an Jiaotong University, 2022. [Certificate]

- First Prize Scholarship, Xi’an Jiaotong University, 2020. [Certificate]

- National Second Prize, WeChat Mini Program Development Competition, 2020. [Certificate]

- Samsung Scholarship, 2019. [Certificate]

- National Second Prize, Contemporary Undergraduate Mathematical Contest in Modeling (CUMCM), 2019. [Certificate]

- First Prize, Chinese Physics Olympiad (CPhO), 2017. [Certificate]

📖 Education

2022.09 – present, Xi’an Jiaotong University, Ph.D. Candidate in Computer Science

• Supervisor: Prof. Weizhan Zhang

2018.09 – 2022.06, Xi’an Jiaotong University, B.S. in Automation

• GPA: 4.0/4.3 (Ranked 1st out of 180) [Transcript]

🏢 Internships

- 2023.10 – 2024.11, China Telecom Artificial Intelligence Technology, Beijing.

- 2025.10 – 2025.11, E-surfing Vision Technology, Shanghai.

🎓 Academic Service

- Reviewer for CVPR, AAAI, TMC, Neural Computation, Neural Networks.